
-

17th December 2011, 08:28 AM #21

- Join Date

- Dec 2008

- Location

- New Zealand

- Posts

- 17,660

- Real Name

- Have a guess :)

Re: pixel pitch, Airy disk diameter and maximum aperture

-

1st February 2012, 09:47 PM #22

- Join Date

- Jan 2012

- Location

- Kane, PA USA

- Posts

- 59

Re: pixel pitch, Airy disk diameter and maximum aperture

Hi

The tutorials from the above banner from this site have a wonderful discussion on Airy's disk!

Tim

-

2nd February 2012, 06:51 PM #23

Re: pixel pitch, Airy disk diameter and maximum aperture

Yep, I agree with the Blue Boy, but then I am probably as thick, and I am originally from Manchester too! Double whammy!

-

2nd February 2012, 09:04 PM #24

Re: pixel pitch, Airy disk diameter and maximum aperture

I strongly suspect that in the real world, this is largely ignored. There are many times when the best absolute resolution is not the important criterion. Some images beg for large depth of field (DOF); for others a shallow DOF may be more effective. A poll was taken on another forum a while ago, and the question was "which mode do you use most often?" The choices were fully automatic, aperture priority, and shutter priority. The most common choice was aperture priority, as it seems that more photographers want to control DOF than any other adjustment.

Having said that, suppose one wishes to know at what aperture the (lens + sensor) system becomes diffraction limited. This is relatively simple.

Go to: http://www.photozone.de/Reviews/overview

Select your camera brand, body and lens combination. Then scroll down to the MTF (resolution) chart with the blue and magenta vertical bars. The beauty of these tests is that they take into account the lens + sensor combination (which is what we actually use).

An example is my 30D + 100 mm f/2.8 macro lens:

http://www.photozone.de/canon-eos/16...review?start=1

The sharpest aperture is f/5.6, where (lens plus sensor) performs in the Excellent range. At f/2.8 it's not quite as good but is still Very Good. At f/16, it's in the lower range of Very Good. But at f/22, it has fallen to Fair.

At the widest aperture (f/2.8) the (lens + sensor) system is aberration limited; at the smallest aperture (f/22) it is suffering from diffraction limitations. Although the tests are done in lab conditions, in a sense they are real world results because they test the lens + sensor together. They can't be separated.

Glenn

-

8th February 2012, 04:42 AM #25

- Join Date

- Feb 2012

- Location

- Texas

- Posts

- 6,956

- Real Name

- Ted

Re: pixel pitch, Airy disk diameter and maximum aperture

An excellent question which seems not to have been answered, if I read the posts correctly. The difficulty introduced with this question is having to consider optical theory in combination with sampling theory. Lens diffraction at small aperture diameters is well covered all over the net, and Wikipedia's offering is as good as any. However, lens diffraction occurs independently of the sensor; by that I mean a piece of toilet paper in the film plane would show exactly the same blur, right? So we're left with Rayleigh's criterion, the definition of which can also be found anywhere. To me, the criterion defines the minimum angular width of some detail in the subject, for example the spacing between posts on a picket fence, which spacing or pitch we could call a "cycle".

When it comes to sensors, their limit is the so-called Nyquist frequency, for which the most understandable units are cycles per pixel and that Nyquist limit is 0.5 cycles per pixel or inversely and more to the point, two pixels per cycle. If the limit is exceeded, nasty things can happen to the image, due to aliasing.
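For anyone who wants to put numbers on the two limits described above, here is a minimal Python sketch. It assumes green light at 0.55 um and a 6.4 um pixel pitch; both are illustrative values that do not come from the post, and the function names are made up for this example. It converts the 0.5 cycles per pixel Nyquist limit to cycles/mm and finds the f-number at which one Rayleigh spacing (1.22 x wavelength x f-number) covers exactly two pixels.

```python
# Sketch only: the wavelength and pixel pitch used here are assumed example values.

def nyquist_cycles_per_mm(pixel_pitch_um: float) -> float:
    """Sensor Nyquist limit (0.5 cycles per pixel) expressed in cycles/mm."""
    pixels_per_mm = 1000.0 / pixel_pitch_um
    return 0.5 * pixels_per_mm


def rayleigh_spacing_um(f_number: float, wavelength_um: float = 0.55) -> float:
    """Rayleigh spacing at the image plane: 1.22 * wavelength * f-number."""
    return 1.22 * wavelength_um * f_number


def f_number_for_two_pixels_per_cycle(pixel_pitch_um: float,
                                      wavelength_um: float = 0.55) -> float:
    """f-number at which one Rayleigh spacing spans exactly two pixels."""
    return 2.0 * pixel_pitch_um / (1.22 * wavelength_um)


pitch_um = 6.4  # assumed pixel pitch in micrometres
nyquist = nyquist_cycles_per_mm(pitch_um)
matching_f_number = f_number_for_two_pixels_per_cycle(pitch_um)

print(f"Nyquist limit: {nyquist} cycles/mm")
print(f"Rayleigh spacing equals two pixels near f/{matching_f_number:.1f}")
```

With these assumed values the Nyquist limit works out to 78.125 cycles/mm and the two-pixel match falls at roughly f/19. A different wavelength or pitch shifts both figures.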

Based on the foregoing, I venture that the answer is choice no. 2) in the originating post.

Of course, we will realize that the contrast of these just-resolved details will not be very high. Someone else might know the MTF at these limits; it would surprise me if it were greater than 10%.
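The contrast at Rayleigh's criterion can be estimated with a short Python sketch. It assumes an ideal, aberration-free lens with a circular aperture in incoherent light, for which the textbook diffraction MTF is (2/pi) * (acos(s) - s * sqrt(1 - s^2)), where s is the spatial frequency divided by the cutoff 1 / (wavelength x f-number). The sensor's own contribution is ignored, and the function name is hypothetical.

```python
import math


def diffraction_mtf(s: float) -> float:
    """MTF of an ideal circular aperture in incoherent light.

    s is the spatial frequency as a fraction of the diffraction cutoff,
    1 / (wavelength * f_number). Above the cutoff the MTF is zero.
    """
    if s >= 1.0:
        return 0.0
    return (2.0 / math.pi) * (math.acos(s) - s * math.sqrt(1.0 - s * s))


# One cycle per Rayleigh spacing (1.22 * wavelength * f_number)
# corresponds to s = 1 / 1.22 of the cutoff frequency.
mtf_at_rayleigh = diffraction_mtf(1.0 / 1.22)
print(f"MTF at Rayleigh's criterion: {mtf_at_rayleigh:.3f}")
```

Under these assumptions the result is a little under 0.09, so the guess of "not greater than 10%" looks reasonable for a perfect lens; a real lens and sensor would only lower it.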

This is my first post here, hope it makes sense.

Ted

-

8th February 2012, 07:50 AM #26

- Join Date

- Dec 2008

- Location

- New Zealand

- Posts

- 17,660

- Real Name

- Have a guess :)

Re: pixel pitch, Airy disk diameter and maximum aperture

Well done Ted - however you still lost me after "An excellent question"

-

8th February 2012, 09:37 AM #27

Re: pixel pitch, Airy disk diameter and maximum aperture

Although lens tests on the Web indicate that the one that is usually on my camera seems best at around f6 to f7, it seems to me that the wisdom in this thread is in the image and words of posts #6 and #7.

Philip

-

9th February 2012, 05:08 PM #28

- Join Date

- Feb 2012

- Location

- Texas

- Posts

- 6,956

- Real Name

- Ted

Re: pixel pitch, Airy disk diameter and maximum aperture

Well, I've just read here http://luminous-landscape.com/tutori...solution.shtml that the MTF at Rayleigh's criterion is a low 9% (MTF = contrast ratio, sorta). Since the OP said "begins to affect", my earlier choice of answer #2 was quite inappropriate. "Begins to affect" implies an MTF of more like 80%, or at least the oft-quoted 50%. As far as aperture setting is concerned, it's generally accepted (as we know) that f/8+ is when diffraction effects "begin to affect" lens resolution.

In the link, they say:

"Then, the general rule for an optimal sampling is 2 pixels per Airy disk diameter in monochrome sensors, which match the Nyquist rate of 2 pixels per line pair. In practice, higher sampling frequencies don’t increase the resolved data"

And, in Table 2, they mention no less than 4 pixels per Airy disk diameter for Bayer arrays! I find the table most interesting; it's well worth some study, rather than a quick glance. For example, at f/5.6 in blue light, your camera needs a 1.4 um pixel pitch, which could be hard to come by.
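The 1.4 um figure can be roughly reproduced with a small Python sketch. The Airy disk diameter is taken as 2.44 x wavelength x f-number, and the blue wavelength of 0.42 um is an assumption chosen for illustration; the post does not say which wavelength the linked table uses. The function names are hypothetical.

```python
# Sketch only: the 0.42 um "blue" wavelength is an assumed value.

def airy_disk_diameter_um(f_number: float, wavelength_um: float) -> float:
    """Diameter of the Airy disk (to the first dark ring) at the image plane."""
    return 2.44 * wavelength_um * f_number


def pixel_pitch_for_sampling_um(f_number: float, wavelength_um: float,
                                pixels_per_airy_disk: float) -> float:
    """Pixel pitch that fits the requested number of pixels across one Airy disk."""
    return airy_disk_diameter_um(f_number, wavelength_um) / pixels_per_airy_disk


blue_um = 0.42  # assumed wavelength for blue light, in micrometres

bayer_pitch = pixel_pitch_for_sampling_um(5.6, blue_um, 4)  # 4 pixels per disk
mono_pitch = pixel_pitch_for_sampling_um(5.6, blue_um, 2)   # 2 pixels per disk

print(f"Bayer (4 px per Airy disk): {bayer_pitch:.2f} um")
print(f"Monochrome (2 px per Airy disk): {mono_pitch:.2f} um")
```

With the assumed wavelength, four pixels per Airy disk at f/5.6 gives a pitch of about 1.43 um, in line with the figure quoted above, and two pixels per disk gives about 2.87 um.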

So, now, my choice from the OP's list of answers is "none of the above"

best regards,

Ted

-

9th February 2012, 07:47 PM #29

- Join Date

- Dec 2008

- Location

- New Zealand

- Posts

- 17,660

- Real Name

- Have a guess :)

Re: pixel pitch, Airy disk diameter and maximum aperture

In my opinion, folks who write about "which aperture is sharpest for a given lens" are mostly putting the cart before the horse. In the VAST MAJORITY of cases, I'm selecting an aperture for depth of field control - NOT to minimise diffraction.

What on earth is the point of having a shot slightly sharper (which I might add is completely sampled out when the image is displayed online at normal size, or unresolvable by the human eye when printed in a small to medium print) when the entire image is completely ruined by having an inappropriate depth-of-field?

-

9th February 2012, 10:21 PM #30

Re: pixel pitch, Airy disk diameter and maximum aperture

The resolution of the sensor isn't what changes. The sensor resolves (up to its resolution, of course) what is being projected on it, be it noticeably sized diffraction patterns, a well resolved image or whatever else (but as far as I know most digital cameras include an anti-aliasing filter). The resolution limit of an ideal lens sampled by a digital image sensor depends on how you define it, and it is subject to the heap paradox. You have already noticed that different sources put the threshold at different values/formulas for the same system, as there is no single correct one. Notice that the image loses sharpness well before it reaches any reasonable "resolution limit". To define a threshold is again like defining a threshold between heaps and not heaps.

-

10th February 2012, 06:06 PM #31

Re: pixel pitch, Airy disk diameter and maximum aperture

So much is written about lens acuity, pixel size and number as well as lens diffraction and every other thing impacting IQ.

Honestly, how sharp do we need our images to be?

Or in other words; do I need the 231 mph Brabus Mercedes 800E to make a trip to the market or even to drive to my daughter a few hundred miles distant?

-

12th February 2012, 07:36 AM #32

Re: pixel pitch, Airy disk diameter and maximum aperture

One might ask, "is it about photography or the equipment"?

I can attest to the problem - my images did not improve from the 30D to the 5DII. And when I went from a sixteen year old Pontiac to a five year old BMW, I don't get there any faster.

Glenn

-

12th February 2012, 09:36 AM #33

Re: pixel pitch, Airy disk diameter and maximum aperture

Exactly.

While it is mildly interesting to read about the technical side of photography I think getting too technical and too concerned over it ruins the creativeness that should be at the core of every image.

I've said it before, I'll say it again and I'll keep on saying it until I'm blue in the face - we should all get out more and take more pictures. That is the only way our photography will improve.

-

12th February 2012, 02:33 PM #34

- Join Date

- Aug 2010

- Location

- Birmingham, Alabama USA

- Posts

- 135

Re: pixel pitch, Airy disk diameter and maximum aperture

Pixel pitch, airy disk diameter and maximum aperture... makes my head hurt. My personal rule (used with the 40D/50D/5D2) is nothing tighter than f/16 on the crops and f/22 on full frame. It is simple and serves me well. I can see some diffraction degradation, but at times the DOF requirements are such that stopping the lens down is required and the sharpness of the files is still entirely acceptable.

-

12th February 2012, 03:14 PM #35

- Join Date

- Mar 2009

- Location

- West Yorkshire

- Posts

- 156

Re: pixel pitch, Airy disk diameter and maximum aperture

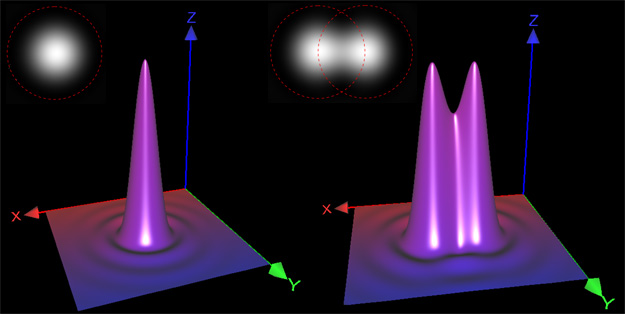

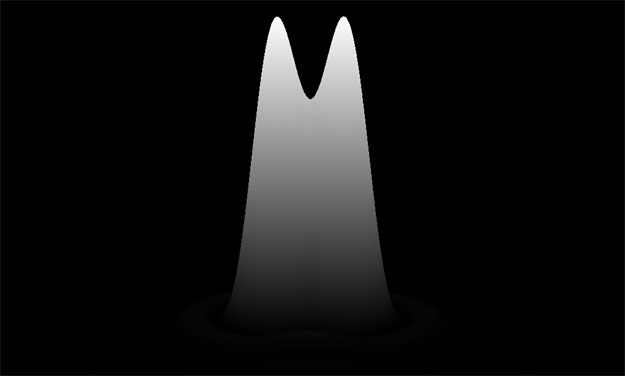

It's definitely none of the above. In the original post the image is wrong in the first diagram.

The third pixel detects the dark gap between two points? Which dark gap? The problem is that the peaks are overlapped such that the point between the two peaks is as bright as the peaks, because it gets half the light from the blue curve plus half the light from the red curve. The Airy discs just have to be further apart for the dark gap to be there, so you can't detect it in this situation. See Dawes' limit for further information, where studies were done on the possibility of resolving two closely spaced stars through a telescope.

-

12th February 2012, 03:50 PM #36

Re: pixel pitch, Airy disk diameter and maximum aperture

Not exactly - about 74% of the peak along the line through both peaks, and even less (much less) away from that line.
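The roughly 74% figure can be checked with a short Python sketch. It assumes two equal, incoherent point sources separated by exactly the Rayleigh distance, each producing an ideal Airy pattern (2 * J1(x) / x)^2, and evaluates the sum along the line joining the centres. To stay within the standard library, J1 is computed from Bessel's integral with a simple midpoint rule; the helper names are made up for this example.

```python
import math


def bessel_j1(x: float, steps: int = 2000) -> float:
    """J1(x) from Bessel's integral (1/pi) * integral_0^pi cos(t - x*sin(t)) dt."""
    h = math.pi / steps
    total = 0.0
    for i in range(steps):
        t = (i + 0.5) * h  # midpoint of each sub-interval
        total += math.cos(t - x * math.sin(t))
    return total * h / math.pi


def airy_intensity(x: float) -> float:
    """Normalised Airy pattern, equal to 1 at the centre (x = 0)."""
    if abs(x) < 1e-9:
        return 1.0
    return (2.0 * bessel_j1(x) / x) ** 2


FIRST_ZERO = 3.8317  # first zero of J1: the Rayleigh separation in these units


def two_point_profile(x: float) -> float:
    """Summed intensity of two equal sources centred at 0 and at FIRST_ZERO."""
    return airy_intensity(x) + airy_intensity(x - FIRST_ZERO)


peak = two_point_profile(0.0)              # at the centre of one source
gap = two_point_profile(FIRST_ZERO / 2.0)  # halfway between the two sources
ratio = gap / peak

print(f"gap / peak = {ratio:.3f}")
```

Under these assumptions the ratio comes out between 0.73 and 0.74, so the midpoint is dimmer than the peaks but only by about a quarter. This is a point-sampled profile; it does not include the averaging over a finite pixel area.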

However, a given pixel catches a bright peak and its darker neighbour, or the dark gap between peaks (the minimum) and its brighter neighbour:

Because pixels are not points, they cover a larger area. So perhaps the summed or averaged I/I0 values detected by a pixel covering a peak and a pixel covering the gap are really very similar. On the line through the maxima the gap even looks like a mirror image of a peak.

However, if their colours differ, they can be seen as separate even if the gap gives the same brightness as a peak. For example, a red peak and a green peak with an almost yellow (red + green) gap between them. But pixels are covered by special filters, and the colour at a given point (pixel) is calculated on the basis of the brightness of several adjacent pixels covered by different filters, which makes everything more weird ... This is really, really a very complex issue, I can't imagine it at all.

The main problem is that we try to apply theory taken from classical photography to digital photography, where an image is not continuous but split between separate pixels. Maybe some kind of statistical math, calculating average differences of brightness for all possible positions/directions of lines or points, could solve this problem. It seems to be very complex.

And the answer to this question is strictly theoretical, not needed for anything in practice ...

Maybe it would be better to give up some of the terms and theory used in classical photography, like resolution expressed in line pairs per mm etc. This is only our strong habit.

-

14th February 2012, 04:14 PM #37

- Join Date

- Feb 2012

- Location

- Texas

- Posts

- 6,956

- Real Name

- Ted

Re: pixel pitch, Airy disk diameter and maximum aperture

Must agree with you on that point. Especially with reference to lp/mm, pure obfuscation! As a newcomer to photography, having never learned serious film stuff, I hate stuff that's forever related to 35mm, e.g. "crop factor", cosy comparisons to "Velvia" (whatever that is) and assumptions that the pic is going to be printed 8x12 on a 5760 lpi printer and viewed at exactly 250mm without actually saying so, e.g. DOF articles or even the stupid markings on your lens.

Darek, do you make those tech. images yourself? They're most impressive.

Ted

-

14th February 2012, 05:33 PM #38

Re: pixel pitch, Airy disk diameter and maximum aperture

I dislike it too, actually hate this term.

Yes. The first chart was made using Graph, the others, including the blur spots, using Graphing Calculator 3D Free Edition, Adobe Photoshop Elements and Corel Draw.

-

14th February 2012, 05:56 PM #39

- Join Date

- Mar 2009

- Location

- West Yorkshire

- Posts

- 156

Re: pixel pitch, Airy disk diameter and maximum aperture

Totally with you on the Rayleigh Criterion bit Darekk, assuming the peaks line up with the centre of your pixel that is, but that's often not going to be the case. If we want to discuss the theory of it, we'll have to consider what happens if the peaks in the beautiful diagrams land on the junction of two pixels. (Ted was spot on there, they're lovely illustrations) Also we'll have to consider the case where the peaks aren't lined up with the pixel axis.

Shall we leave the Bayer matrix out of this for now? ;-)

-

14th February 2012, 06:11 PM #40

Re: pixel pitch, Airy disk diameter and maximum aperture
