
-

29th August 2017, 09:21 AM #41

- Join Date: May 2014
- Location: amsterdam, netherlands
- Posts: 3,182
- Real Name: George

Re: THANK YOU - SUPPLEMENTARY OP: Nikon D5xxx - 12-bit vs 14-bit

-

29th August 2017, 01:01 PM #42

- Join Date: Feb 2012
- Location: Texas
- Posts: 6,956
- Real Name: Ted

Re: THANK YOU - SUPPLEMENTARY OP: Nikon D5xxx - 12-bit vs 14-bit

If it's any help, IrfanView used to extract the embedded JPEG from Sigma X3F files because it could not convert the Foveon raw data. Sigma's embedded JPEGs can be Y'CbCr 4:2:0, which is usually the lowest chroma sub-sampling in use. Later cameras upped that to 4:2:2, which is better but still not the best, which is 4:4:4.

https://en.wikipedia.org/wiki/Chroma_subsampling
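To put rough numbers on those three schemes, here is a minimal sketch that counts stored samples per pixel from the J:a:b notation. The function name is made up for illustration, and it only counts samples; it says nothing about how hard the JPEG was compressed afterwards.

```python
from fractions import Fraction

def samples_per_pixel(j, a, b):
    """Average stored samples per pixel for J:a:b chroma subsampling.

    The notation describes a block J pixels wide and 2 rows high:
    a = chroma samples in the first row, b = chroma samples in the second row.
    """
    luma = 2 * j              # one Y' sample for every pixel in the J x 2 block
    chroma = 2 * (a + b)      # Cb and Cr each contribute a + b samples
    return Fraction(luma + chroma, 2 * j)

for scheme in [(4, 4, 4), (4, 2, 2), (4, 2, 0)]:
    n = samples_per_pixel(*scheme)
    print("%d:%d:%d -> %.2f samples per pixel" % (*scheme, float(n)))
```

So 4:2:0 stores half the samples of 4:4:4 before the actual JPEG compression even starts.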

As to NEF, I have no idea and am offering the above only as a hint about your "lower quality".

Blind guess: your NEF JPEG may be the same size pixel-wise but highly compressed.

Last edited by xpatUSA; 29th August 2017 at 02:12 PM.

-

29th August 2017, 02:06 PM #43

- Join Date: May 2014
- Location: amsterdam, netherlands
- Posts: 3,182
- Real Name: George

-

29th August 2017, 02:17 PM #44

- Join Date: Feb 2012
- Location: Texas
- Posts: 6,956
- Real Name: Ted

Re: THANK YOU - SUPPLEMENTARY OP: Nikon D5xxx - 12-bit vs 14-bit

Not yet, maybe tomorrow.

Don't know what is meant by that. The HEX view?

I mean IrfanView can find its way through the file structure.

Can't, I don't shoot Nikon.

You can try it with other viewers. Probably the same.

George

Please ignore everything I said, was only trying to help.

-

29th August 2017, 03:47 PM #45

- Join Date: May 2014
- Location: amsterdam, netherlands
- Posts: 3,182
- Real Name: George

Re: THANK YOU - SUPPLEMENTARY OP: Nikon D5xxx - 12-bit vs 14-bit

Every file has a predefined structure. From the first bit on, information units are declared: their structure and size. And there's a part third parties can make use of for their own declarations. Just google "tiff file structure", for example: http://www.fileformat.info/format/tiff/corion.htm
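As a small illustration of "declared from the first bit", this sketch reads the 8-byte header that every TIFF-style file starts with: a byte-order mark, the number 42, and the offset of the first directory of tags. The function name and the hand-made sample bytes are assumptions for illustration; raw formats built on TIFF add their own maker-specific tags further in, which this does not touch.

```python
import struct

def read_tiff_header(data):
    """Parse the 8-byte TIFF header: byte order, magic number 42, first IFD offset."""
    if len(data) < 8:
        raise ValueError("need at least 8 bytes")
    order = data[:2]
    if order == b"II":
        endian = "<"      # little-endian ("Intel")
    elif order == b"MM":
        endian = ">"      # big-endian ("Motorola")
    else:
        raise ValueError("not a TIFF-style header")
    magic, ifd_offset = struct.unpack(endian + "HI", data[2:8])
    if magic != 42:
        raise ValueError("unexpected magic number %d" % magic)
    return {"byte_order": order.decode("ascii"), "first_ifd_offset": ifd_offset}

# Hand-made 8-byte header for illustration (not taken from a real camera file).
sample = b"II" + struct.pack("<HI", 42, 8)
print(read_tiff_header(sample))
```

To try it on a real file, pass in the first 8 bytes read with `open(path, "rb").read(8)`. A viewer then follows the offset to the tag directory to find things like an embedded preview.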

George

-

29th August 2017, 06:25 PM #46

-

29th August 2017, 06:40 PM #47

SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

Article and follow-up

Hello Every CiC-member,

This is to convey thanks to everyone who has been contributing actively during this Think tank, OP of mine! I appreciate your input and time!

I have read all posts with interest as well as viewing the figures, snapshots! I do like figures and models.

The effort here has impacted me positively. Before I reveal how, and whether I will continue capturing 12-bit or return to 14-bit NEF/RAW, I wish, as we are rolling(!), to share an article and lay two follow-up items on the table:

FOLLOW UP

(A) When I first started out with the Nikon D5600 and had to decide whether to use 14-bit or 12-bit, I did some deep research and read, among other things, the following article, where the author has made tests (as you will see) comparing the results from 12-bit lossy, 12-bit lossless, 14-bit lossy and 14-bit lossless capture.

Your inputs above have been useful to me (actually changed how I will operate the D5600) and thus I would like to share the following article for serious scrutiny.

What do you think: does the author have a point with his article and testing, or does he miss something? (What I can tell is that some liked and some criticized a similar article, as always.)

https://digital-photography-school.c...right-for-you/

(B) As a photographer, systems engineer and human being, I cannot resist laying the following final items on the table.

Since we are so many competent people here in one place: We have learned about the pros and cons regarding 14-bit lossless compressed versus 12-bit lossy compressed when it comes to editing, post processing and perhaps IQ!

However, when talking about small and large file sizes, possibilities during editing, etc.: can we conclude here (with a rationale, please) which compression technique comes in second place and which comes in third? Is 12-bit lossless compression better than 14-bit lossy, or is it the other way around?

Note that this is not an academic question – since we have learned here that some cameras (like the Nikon D5600) shoot 14-bit lossy and some (Canon xxx) shoot 12-bit lossless without any other alternatives! Without stating more during this part of the OP, I (as a systems engineer) can appreciate that this may not be settled exactly due to reasons of e.g. different implementations, compression algorithms. However I believe if the rest is created equal (Occam's razor), and based upon how the compression algorithms work on e.g. shadows and highlight, that at least a “Rule of thumb” can be established by us here!

So, condensed: do we believe that 12-bit lossless compression is the second-best alternative, or is it 14-bit lossy compression that takes that place?
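On the file-size half of that question, the starting point before any compression is simple arithmetic. The sketch below assumes a 6000 x 4000 sensor purely for illustration and counts only the raw sample data; how much a lossless or lossy scheme then shaves off is camera-specific and is not modelled here, so it does not settle the ranking.

```python
def uncompressed_megabytes(width, height, bits_per_sample):
    """Size of the raw sample data alone, one sample per photosite, in MB (10**6 bytes)."""
    return width * height * bits_per_sample / 8 / 1e6

# Assumed 6000 x 4000 photosites (24 MP), chosen only as an example.
for bits in (12, 14):
    size = uncompressed_megabytes(6000, 4000, bits)
    print(bits, "bit:", size, "MB before any compression")
```

The 14-bit data starts out one sixth larger than the 12-bit data; everything after that depends on the compression actually used.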

Thank you for your cooperation, from Scandinavia!

-

29th August 2017, 06:47 PM #48

- Join Date: Feb 2012
- Location: Texas
- Posts: 6,956
- Real Name: Ted

-

29th August 2017, 08:56 PM #49

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

In general I agree with the article.

There are two very important factors that I think need stressing. Firstly, the subject and dynamic range of the scene being photographed. The tests done in the article were of a scene with a fairly limited dynamic range. Had a very high dynamic range scene (such as a sunset being reflected in city buildings) been used, I am sure the advantages of 14-bit over 12-bit would have become more apparent. Such a scene would also have hard edges, which would make any loss of definition in the compressed files more obvious. Unless the photograph being taken is pushing the limits of the sensor, the choice of RAW file is probably not overly important.

Secondly, the use/output of the image. If it is to be printed on a large, high-print-quality billboard and viewed closely, it will require the best possible RAW file and very careful PP. The same image printed no bigger than A5 in a newspaper will get away with a compressed JPEG as the source file. When I was doing photography for real estate, the final use of the images made a difference to how the photographs were taken, the time it took to take and edit them and, subsequently, the amount the client was charged.

P.S. Underexposing and overexposing in the test are not truly equivalent to photographing an HDR scene.

Last edited by pnodrog; 30th August 2017 at 12:34 AM.

-

30th August 2017, 02:16 AM #50

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

+1 to Paul's comments. I tend to read the article much in the same way he does. On the other hand, writing about 8-bit / 12-bit / 14-bit and compressed versus uncompressed and then displaying all of the sample shots as 8-bit lossy compressed jpegs is a bit much. Small wonder we don't notice any difference between the images...

There are a couple of places where the author has completely missed the boat. The first is the influence of colour space on the final image quality.

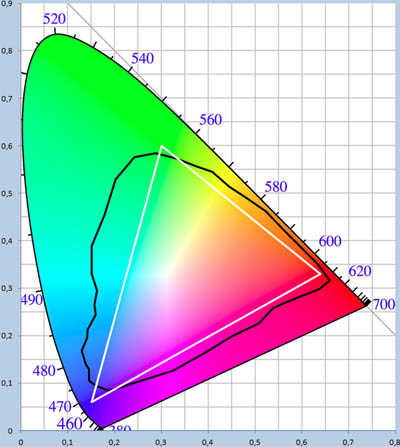

Raw data is not assigned any colour space, as all we are dealing with is data. Once we convert that raw data into an image file we assign both a colour space and a colour temperature, and these are "baked" into the image file. Regardless of the quality of data that the camera's sensor has captured and stored as raw data, much of this can be thrown out when the colour space is assigned when the data is converted into an image file. Select the sRGB colour space and one can only reproduce about 35% of visible colours; AdobeRGB just over 50% of the visible spectrum and ProPhoto, virtually 100% of naturally occurring colours.

The boundaries of the colour space diagrams represent the absolute limit of the shades that can be displayed and the number of bits used define the number of discrete colours inside that envelope.
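To put numbers on "the number of bits defines the number of discrete colours", here is a quick sketch. The helper name is made up, and the RGB figure assumes three channels at the same bit depth, which describes a converted image file rather than raw data with one value per photosite.

```python
def levels(bits):
    """Number of distinct values a single channel can take at this bit depth."""
    return 2 ** bits

for bits in (8, 12, 14, 16):
    per_channel = levels(bits)
    print("%2d bits: %6d levels per channel, %d RGB combinations"
          % (bits, per_channel, per_channel ** 3))
```

Going from 12 to 14 bits gives four times as many steps per channel inside the same envelope; it does not make the envelope any bigger.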

The other issue is the output device used. A standard computer display will be able to reproduce the sRGB colours, while some high-end screens come close to (99%+ of) the AdobeRGB colour space. If you use a run-of-the-mill commercial printer, most use only the sRGB colour space and a few higher-end ones will support the AdobeRGB colour space. High-end professional colour photo printers will come closer to reproducing the ProPhoto colour space.

The other issue is editing images, especially when using a wide-gamut colour space, like ProPhoto. More data is better as the photo editing software will change the values of individual pixels. Push an image with too little data and you will see artifacts and colour blocking.

As I stated in a previous posting, saving the highest quality data possible makes the most sense as storage costs are relatively low. How specific camera model compression algorithms are implemented, that is something that can only be determined by some serious testing. Fortunately, my cameras offer 14-bit lossless compression, so that is the direction I have taken.

-

30th August 2017, 06:03 AM #51

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

I've never been able to find any really convincing data on the differences between 12 and 14 bit shooting. So I just use lossless compressed 14 bit - because I can and it is potentially the best!

I have read that noise in an image can have an effect on the differences in posterisation that might occur between 12-bit and 14-bit data (but you would probably need fairly extreme processing to even see it). If the noise level is greater than the least significant bit, you get a dithering effect that can mask the transitions. FWIW.
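The dithering idea can be tried with a toy simulation. This is only a sketch under made-up assumptions: Gaussian noise of one 12-bit step, a single flat patch at a level that falls between two 12-bit codes, and simple rounding as the quantiser. It is not a model of any real sensor.

```python
import random

def mean_quantised(signal, noise_sigma, step, n=20000, seed=1):
    """Average of n noisy readings after rounding each to the nearest multiple of `step`."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        reading = signal + rng.gauss(0.0, noise_sigma)
        total += round(reading / step) * step
    return total / n

signal = 1000.3   # true level, in units of one 12-bit step
for label, step in (("12-bit", 1.0), ("14-bit", 0.25)):
    quiet = mean_quantised(signal, 0.0, step)
    noisy = mean_quantised(signal, 1.0, step)
    print(label, "no noise:", quiet, "| noise of one 12-bit step:", round(noisy, 2))
```

Without noise the 12-bit reading is stuck on the nearest whole step. With noise at or above one step, the average over the patch recovers the in-between level at either bit depth, which is why the extra two bits may buy little once noise dominates.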

Dave

-

30th August 2017, 10:15 AM #52

- Join Date: May 2014
- Location: amsterdam, netherlands
- Posts: 3,182
- Real Name: George

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

I just keep having problems with this approach to the color space. Being a fan of a simplified approach, I wrote this down for myself. It's a continuing process.

1. The sensor captures light for 3 colors: R, G and B. In the sensor that light (wavelength) is translated into a current.

2. The analogue current is digitized. An A/D process needs a range and a divider, the tonal depth. In the RAW process this is mostly a choice between 12 and 14 bit. By changing that range the camera simulates a higher sensitivity, ISO.

3. In the demosaicing process pixels with a value for R, G and B are created. The digital picture is born.

4. Processing is done with these digital RGB values. Starting in the converter this is done with a bit depth of 12 or 14; when using a JPG it will be 8 bit. In memory probably 8 or 16 bit will be used, being logical units.

5. The digital picture is sent to an output device. The digital values are converted back to analogue in a D/A process. Again an analogue range is needed. The output device determines the range and tonal depth. One can read the digital value as the used percentage of the tonal depth, bit depth.

The D/A is done in that process to the output. It doesn't matter if that is done in the PC or in the device. What is important to know is that for that process a range has to be defined. That range is defined by the output device: its ability to produce light with a minimum and maximum wavelength. If that range doesn't fit the range of the input, you'll see something different from reality: wrong colors. The color space is a correction of that range. I'm not free to choose a color space; I must select one based on my output device.
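Step 2 above (a range and a divider) can be sketched as an idealised converter. The function name and the voltages are made up for illustration, and a real camera applies analogue gain ahead of the converter rather than literally rescaling it, so read this as the simplified model described in the list and nothing more.

```python
def adc_code(voltage, full_scale, bits):
    """Map an analogue value in [0, full_scale] onto an integer code of the given bit depth."""
    max_code = 2 ** bits - 1
    clipped = min(max(voltage, 0.0), full_scale)   # anything outside the range is clipped
    return round(clipped / full_scale * max_code)

# Same analogue value, 12-bit versus 14-bit divider.
print(adc_code(0.25, 1.0, 12), adc_code(0.25, 1.0, 14))
# Halving the range roughly doubles the code for the same input,
# which is the "simulated higher sensitivity" in this simplified model.
print(adc_code(0.25, 0.5, 12))
```

The range decides where clipping happens; the bit depth decides only how finely that range is divided.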

George

-

30th August 2017, 12:59 PM #53

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

Not quite, George. The device capabilities are referred to as the gamut, whereas a colour space is effectively a mathematical model that tells a device which shade to assign to a particular pixel value. Whenever the value of the data exceeds the device's ability to reproduce a specific colour, we refer to it as being "out of gamut".

That is handled by the device (through its drivers) as well. If you have read or heard about the "rendering intent", this is how it is handled. Our devices (screens and printers) let us select the most appropriate rendering intent; in photography we use two different ones: perceptual and relative colorimetric.

The perceptual intent takes all of the colours and remaps them so that they can be displayed or printed. This means all the colour values could end up being shifted. With the relative colorimetric approach, only the out-of-gamut colours are changed, and they are taken to a value that can be displayed. I do pay attention to this when printing, as I find that images with people in them tend to work better with the relative colorimetric approach and images with brilliant colours look better when using the perceptual rendering intent. And yes, one can usually see the difference.
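The difference between the two intents can be shown with a deliberately crude one-dimensional toy. Real colour management works in a three-dimensional space with non-linear mappings taken from the profile; the single "saturation" number, the linear scaling and both function names here are assumptions made only to show the idea.

```python
def relative_colorimetric(values, limit):
    """Leave in-gamut values alone; clip anything beyond the device limit to the limit."""
    return [min(v, limit) for v in values]

def perceptual(values, limit):
    """Scale every value by the same factor so the largest one just fits the limit."""
    peak = max(values)
    if peak <= limit:
        return list(values)
    return [v * limit / peak for v in values]

saturation = [0.2, 0.6, 0.9, 1.2]   # made-up numbers; 1.0 is the device limit
print(relative_colorimetric(saturation, 1.0))
print(perceptual(saturation, 1.0))
```

In the first result only the out-of-gamut value moves. In the second every value shifts a little, which keeps the relationships between colours at the cost of accuracy for the in-gamut ones.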

Added: https://msdn.microsoft.com/en-us/lib...(v=vs.85).aspx

Good, brief description on rendering intents.

It depends. If you are still using a VGA connector to your computer screen this is correct, but all my devices use digital inputs and outputs, so there is no D/A conversion. The only A/D conversion in my workflow occurs in the camera, where the sensor is an analogue device.

Last edited by Manfred M; 30th August 2017 at 02:32 PM.

-

30th August 2017, 01:36 PM #54

- Join Date: Feb 2012
- Location: Texas
- Posts: 6,956
- Real Name: Ted

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

As to "naturally occurring colours" there does exist Pointer's Gamut which is said to represent those colors:

http://www.tftcentral.co.uk/articles...#_Toc379132054

There, it is said that sRGB covers 70.2% of that Gamut and Adobe RGB (1998) 80.3%.

As to ADC bit depth, there is a metric, "effective bit depth". While many of us fondly imagine that zero sensor output gives zero count at the ADC output, that is far from the case. Analog Devices publishes data sheets which give that number; for one of my cameras, its 12-bit ADCs are actually 10-1/2 effective bits.

As to "raw" bit depth in the file, another of my cameras writes raw data to the memory card in the range of 0 to about 2330 (fractionally just over 11 bits, or 1/2 a stop lost, sorta). So you know that there's some in-camera shenanigans going on there. Oddly enough, that same camera is one of my best for on-screen color, albeit sRGB.
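For anyone who wants to check the arithmetic, here is a small sketch. The first helper turns a maximum raw value into a (fractional) bit count. The second is the usual textbook relation between a converter's SINAD in dB and its effective number of bits; it is added here as background and is not something quoted in this thread. Both function names are made up.

```python
import math

def bits_needed(max_value):
    """Number of bits (possibly fractional) spanned by integer codes 0..max_value."""
    return math.log2(max_value + 1)

def effective_bits(sinad_db):
    """Textbook ENOB relation: (SINAD - 1.76 dB) / 6.02 dB per bit."""
    return (sinad_db - 1.76) / 6.02

print(round(bits_needed(2330), 2))   # the raw range mentioned above
print(bits_needed(4095))             # a full 12-bit range, for comparison
print(round(effective_bits(65.0), 2))
```

A SINAD of roughly 65 dB corresponds to about ten and a half effective bits, which is the order of magnitude mentioned for the 12-bit ADC.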

Last edited by xpatUSA; 30th August 2017 at 01:43 PM.

-

30th August 2017, 02:24 PM #55

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

Pointer's gamut is an interesting concept that, for a variety of reasons, has never become mainstream (nor have I seen any validation of his work). CIE 1931 xy and CIE 1976 u’v’ are the base references for most serious colour work, so I will tend to stick to those.

The concepts of "naturally occurring colours" and "human colour vision" are hard to validate to begin with. I strongly suspect that the statistical sample in the work that Wright and Guild did in the 1920s (input to the CIE standards) would be suspect by today's standards, as their sample would likely have been biased towards Caucasians (and in fact likely males). Today's sampling would have a more global (all races) and gender mix. Some of the age-related colour vision deterioration might not have been picked up, or might even have been purposefully excluded, etc.

Regardless, the CIE standards drive a lot of colour work, so I will tend to favour them because they are such a generally accepted baseline.

-

30th August 2017, 03:36 PM #56

- Join Date: Feb 2012
- Location: Texas
- Posts: 6,956
- Real Name: Ted

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

It was your good self that mentioned "naturally occurring colours" and I merely sought to expand on that mention because you compared two apples with an orange for some reason.

Your response, by questioning the validity of Pointer's Gamut, casts doubt on the validity of my post to an extent. Michael R. Pointer is a well-respected color researcher and his findings are not to be taken lightly, IMHO.

Source, from an article about displays and Rec. 2020:

"For a 1980 study, a British color researcher named Michael R. Pointer gathered more than four thousand objects with very saturated colors, including more than twenty-five hundred paint swatches from various collections and hundreds of colored textiles, papers, and plastics. Pointer shined light of various wavelengths and brightness levels on the samples and measured the reflections. By charting the results, he produced a good approximation of the range of colors one could ever expect to see."

http://www.newyorker.com/tech/elemen...missing-colors

Did you know that the Pointer gamut was one of the datasets used by Kodak when developing ProPhoto RGB?

http://www.rit-mcsl.org/Mellon/PDFs/...R_May_2014.pdf

I claim that Pointer's Gamut is good enough to serve as a reference for the rest of us, insofar as color space coverage is concerned.

Last edited by xpatUSA; 30th August 2017 at 04:13 PM.

-

30th August 2017, 10:56 PM #57

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

Colour space is irrelevant when discussing RAW file size.....

-

30th August 2017, 11:41 PM #58

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

Ted - I was not questioning Pointer's work per se, but rather the underlying assumptions that we make about statements like "naturally occurring colours" and "human vision". Is the spectral line from a gas discharge lamp a naturally occurring colour? What about the iridescent colours we can see in certain jellyfish or tropical fish?

I had similar questions about the research that went into the CIE standards: are these "ideal" standards based on the colours seen by a young female with optimal colour vision? Were they based on a statistical sample of a particular group or subgroup of test subjects? And so on.

The problem I have run into many times, mainly professionally, is when people quote or refer to specific standards to justify their position. Upon closer examination, one can often find that the standard being touted is not applicable to the purpose that has been proposed.

Bottom line is I want to understand what a "natural colour" is and what the colour sensitivity of "human vision" is.

-

31st August 2017, 04:11 AM #59

- Join Date: Feb 2012
- Location: Texas
- Posts: 6,956
- Real Name: Ted

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

I think that we have drifted well off-topic and ended up with too many rhetorical questions that can be addressed quite easily by ordinary research. One from me: what about fluorescent colors?

Here's one answer about the 1931 2-degree Observers.

"In the 1920s two color scientists, W. D. Wright and J. Guild, each performed similar color vision experiments. Wright performed his experiment on 10 subjects, Guild used 7."

Those numbers of subjects would make modern pollsters turn in their graves (population, sample size, confidence level, margin of error, etc).

As you said, in the 1920's, they were probably well-nourished healthy males - rich enough to spend their time thus instead of scratchin' a living . . .

https://medium.com/hipster-color-sci...y-401f1830b65a

Last edited by xpatUSA; 31st August 2017 at 06:21 AM.

-

31st August 2017, 05:37 AM #60

- Join Date: Feb 2012
- Location: Texas
- Posts: 6,956
- Real Name: Ted

Re: SUPPLEMENTARY OP II - Lossy vs Lossless vs 12-bit vs 14-bit

As to seeing the difference on-screen, there is (was?) a well-known anomaly with reference to perceptual intent if the embedded ICC profile is of the simple matrix type - which is the most common for profiles of type 'display'. The anomaly is that the 'perceptual' tag in the profile is ignored by Photoshop, etc, and what you get is relative colorimetric whether you like it or not. This anomaly is not found in print profiles which normally have CLUTs.

It may be possible that this anomaly has been fixed since about 4 years ago when I could not understand why some of my highly-saturated flower shots were saturation-clipped even though I told my editor to use perceptual intent.

P.S. found this:

https://photographylife.com/the-basi...or-calibration

"Matrix profiles have information for only relative colorimetric intent transformation."

https://ninedegreesbelow.com/photogr...#matrix-vs-lut

Last edited by xpatUSA; 31st August 2017 at 05:57 AM.
