Our modern digital cameras are, in theory, capable of capturing all the colours humans can see. Our output devices, whether they are computer screens or printers, are far more limited in their capabilities. We refer to the colour range that an output device can reproduce as its gamut. If our image contains data that cannot be reproduced by our specific output device, that colour is referred to as being “out of gamut”, often abbreviated as OOG.

Our digital cameras capture data, which must be turned into an image file before it can be viewed. Part of that conversion process requires that the data be assigned a colour space, which tells our software how to interpret the colour data our cameras have captured. Even the most basic cameras can turn out sRGB JPEG images. More advanced cameras can also produce JPEG images that use the AdobeRGB colour space. Anyone who uses Adobe’s Lightroom will be working with a variant of the ProPhoto RGB colour space.

The standard that we use to compare colour spaces is the CIE Lab 1931 colour space, which is a good representation of all the colours that humans can see. The sRGB colour space can reproduce about 1/3 of the colours of CIE Lab 1931, AdobeRGB can reproduce around 1/2 of the CIE Lab 1931 colour space and ProPhoto RGB can reproduce about 90% of the colours in the CIE Lab 1931 colour space. As colour spaces get wider, they will display more vibrant colours than the narrower colour spaces.
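As a rough illustration of "wider" versus "narrower", the sketch below compares the areas of the triangles that the three sets of primaries span on the CIE xy chromaticity diagram. The chromaticity coordinates are the commonly published ones; the names `PRIMARIES` and `triangle_area` are just for this example. Triangle area on the xy diagram is a crude proxy, so it should not be expected to reproduce the one-third, one-half and 90% figures quoted above, which rest on a different measure.

```python
# Chromaticity (x, y) coordinates of the red, green and blue primaries
# (commonly published values for each colour space).
PRIMARIES = {
    "sRGB": [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)],
    "AdobeRGB": [(0.64, 0.33), (0.21, 0.71), (0.15, 0.06)],
    "ProPhoto RGB": [(0.7347, 0.2653), (0.1596, 0.8404), (0.0366, 0.0001)],
}


def triangle_area(points):
    """Area of the triangle spanned by three (x, y) points (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


areas = {name: triangle_area(points) for name, points in PRIMARIES.items()}

for name, area in areas.items():
    # Express each area relative to sRGB to make the ordering obvious.
    ratio = area / areas["sRGB"]
    print(f"{name:13s} xy area = {area:.4f}  ({ratio:.2f} x sRGB)")
```

The ordering (sRGB smallest, ProPhoto RGB largest) is the point here; the exact ratios depend heavily on which diagram or colour model is used for the comparison.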

We can convert our images from one colour space to another using software tools, but the conversion process is uni-directional. A wider colour space can be converted to a narrower one, but in doing so, the data that does not fit in the narrower space is thrown away and cannot be recovered. While we can take a narrower colour space, say sRGB, and convert it to a wider one, say AdobeRGB, all of the colours will still be the sRGB colours, merely mapped to AdobeRGB values.
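The one-way nature of the conversion can be sketched with a few lines of arithmetic. This is a simplified model, not what a colour management module actually does: it works on linear-light values (no tone curve), uses the commonly published D65 matrices for sRGB and AdobeRGB rounded to seven places, and stands in for gamut clipping with a plain per-channel clamp. All function names are made up for the example.

```python
# Linear-light RGB <-> CIE XYZ matrices (D65 white point), rounded values.
SRGB_TO_XYZ = [
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
]
XYZ_TO_SRGB = [
    [3.2404542, -1.5371385, -0.4985314],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0556434, -0.2040259, 1.0572252],
]
ADOBE_TO_XYZ = [
    [0.5767309, 0.1855540, 0.1881852],
    [0.2973769, 0.6273491, 0.0752741],
    [0.0270343, 0.0706872, 0.9911085],
]
XYZ_TO_ADOBE = [
    [2.0413690, -0.5649464, -0.3446944],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0134474, -0.1183897, 1.0154096],
]


def mat_vec(matrix, vector):
    """Multiply a 3x3 matrix by a 3-element vector."""
    return [sum(matrix[r][c] * vector[c] for c in range(3)) for r in range(3)]


def adobe_to_srgb(rgb):
    """Convert linear AdobeRGB to linear sRGB without any clipping."""
    return mat_vec(XYZ_TO_SRGB, mat_vec(ADOBE_TO_XYZ, rgb))


def srgb_to_adobe(rgb):
    """Convert linear sRGB to linear AdobeRGB without any clipping."""
    return mat_vec(XYZ_TO_ADOBE, mat_vec(SRGB_TO_XYZ, rgb))


def clip(rgb):
    """Clamp each channel to the 0..1 range a normal image file can store."""
    return [min(1.0, max(0.0, c)) for c in rgb]


adobe_green = [0.0, 1.0, 0.0]            # the most saturated AdobeRGB green
unclipped = adobe_to_srgb(adobe_green)   # has a negative red channel
stored = clip(unclipped)                 # what an sRGB file would hold
round_trip = srgb_to_adobe(stored)       # converting back to AdobeRGB

print("AdobeRGB original :", adobe_green)
print("sRGB, unclipped   :", [round(c, 4) for c in unclipped])
print("sRGB, as stored   :", [round(c, 4) for c in stored])
print("back in AdobeRGB  :", [round(c, 4) for c in round_trip])
```

The negative red value is what "out of gamut" looks like numerically. Once it has been clamped to zero, converting back to AdobeRGB returns the sRGB green expressed in AdobeRGB numbers, not the original colour.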

When we display a colour space that is wider than our output device can handle, our operating system or printer drivers use the assigned Rendering Intent to handle out of gamut colours. Operating systems use the Relative Colorimetric rendering intent, which leaves the colours that are in gamut unchanged, but clips the out of gamut colours to the edge (sometimes referred to as the hull) of the colour space.

We do something similar when we convert colours to the same colour space as our computer screens, and if we do no further editing after conversion, then both paths will give us virtually identical results when we look at our computer screen.

If we edit these images, using a wide gamut colour space gives us the advantage of using all the colour data in the original image. Colours that were OOG in the original image can come back into gamut when edited. When using a wide-gamut colour space, the original colours will be preserved and the correct colours will appear on the screen. If the edits are made to data in a smaller colour space, then the editing program will be working with data where the rendering intent has already changed the colours, and less accurate colours will come out of the editing process. A colour channel that has been clipped or blocked cannot be recovered.
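To put numbers on this, here is a minimal sketch that applies the same edit along both paths: once in the wide space before converting for an sRGB screen, and once after the data has already been clipped to sRGB. The assumptions are the same as in any simplified model: linear-light values, commonly published D65 matrices, a plain per-channel clamp standing in for Relative Colorimetric clipping, and a hypothetical `desaturate` edit that blends toward neutral grey. Because both spaces share the same white point, that blend is the same colorimetric operation in either space, so any difference in the result comes from the clipping alone.

```python
# Linear AdobeRGB -> XYZ and XYZ -> linear sRGB (D65), rounded values.
ADOBE_TO_XYZ = [
    [0.5767309, 0.1855540, 0.1881852],
    [0.2973769, 0.6273491, 0.0752741],
    [0.0270343, 0.0706872, 0.9911085],
]
XYZ_TO_SRGB = [
    [3.2404542, -1.5371385, -0.4985314],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0556434, -0.2040259, 1.0572252],
]


def mat_vec(matrix, vector):
    """Multiply a 3x3 matrix by a 3-element vector."""
    return [sum(matrix[r][c] * vector[c] for c in range(3)) for r in range(3)]


def adobe_to_srgb(rgb):
    """Convert linear AdobeRGB to linear sRGB without any clipping."""
    return mat_vec(XYZ_TO_SRGB, mat_vec(ADOBE_TO_XYZ, rgb))


def clip(rgb):
    """Clamp each channel to 0..1 (stand-in for gamut clipping)."""
    return [min(1.0, max(0.0, c)) for c in rgb]


def desaturate(rgb, amount=0.5, grey=0.5):
    """Hypothetical edit: blend a linear RGB colour toward neutral grey."""
    return [(1.0 - amount) * c + amount * grey for c in rgb]


original = [0.0, 1.0, 0.0]  # saturated AdobeRGB green, outside sRGB

# Path 1: edit in the wide space, convert for the sRGB screen afterwards.
wide_unclipped = adobe_to_srgb(desaturate(original))
wide_path = clip(wide_unclipped)

# Path 2: convert and clip to sRGB first, then apply the same edit.
narrow_path = desaturate(clip(adobe_to_srgb(original)))

print("edited in wide space  :", [round(c, 4) for c in wide_path])
print("edited in narrow space:", [round(c, 4) for c in narrow_path])
```

In this example the edit brings the colour back inside sRGB, so the wide-space path needs no clipping at all and shows the colour the edit actually produced. The narrow-space path starts from the already clipped green and ends up with a visibly different, less saturated result.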

Looking at some examples to try to explain this visually:

Assume that this example shows “pure” values of Red, Green and Blue. The outside rectangle shows wide gamut colours that cannot be displayed on a normal sRGB screen, but the inner square has colours that are just barely in gamut and can be displayed on a standard sRGB screen. This is essentially what we would see if we displayed this data on an AdobeRGB-compliant screen.

Now if we decide to display this data on an sRGB-compliant screen, we could follow one of two approaches. We could convert the data to the narrow sRGB colour space, or we could just leave the data in a wider gamut colour space and let the operating system's rendering intent take care of the out of gamut colours. The two approaches will give exactly the same output on the sRGB screen:

The upshot of this is that if one is not planning to edit the data, either workflow will give identical results from an end user standpoint. On the other hand, if one edits the image and some of the data comes into gamut through the edit, this information will be accurate and will be displayed if one is working in the wide gamut colour space. The narrow gamut colour space will not have this data and the colours will not be accurate. In this example, the large vertical rectangle shows the output on the sRGB screen of both of these scenarios.

The first image shows an emulation of what happens if we work in a wide colour space and some of the colours come into gamut due to the edit. As these colours were edited in the wide colour space, the out of gamut colours are still handled by the operating system and the rendering intent, but the colours that are in gamut after the edit are displayed accurately.

In the second diagram, the edit is made to data in the narrow colour space and the original data has been lost. The edit is applied to the narrow colour space, so some of the subtlety and colour accuracy of the original data has been lost.

Conclusion: Working in a wide colour space like ProPhoto RGB has advantages, even in cases where this colour space is larger than the computer screen can display. Converting to a narrower colour space, for instance when uploading an image to the Internet, is a necessary step in this workflow. Failure to do so can result in the colours not being displayed properly for other people viewing the image.


-

30th November 2017, 02:25 AM #1

Why one should use a wide colour space when editing images on a narrow gamut screen

-

30th November 2017, 06:22 AM #2

- Join Date: Feb 2012
- Location: Texas
- Posts: 6,956
- Real Name: Ted

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

Interestingly, it would appear that Lightroom always works in a linear wide-gamut editing space, irrespective of what gets "assigned" or selected as a "working color space".

See http://www.colourspace.xyz/the-truth...ur-management/

It says:

"Lightroom’s editing space accomplishes this by using the RGB primaries and white point of ProPhoto RGB. ProPhoto RGB is a huge RGB colour space, so big in fact that portions of it are outside the human visual range. It can easily encompass digital camera colour spaces.

"Lightroom doesn’t use the actual ProPhoto RGB profile though. ProPhoto RGB has a gamma (or tone) curve of 1.8 whereas digital cameras capture using a linear 1.0 gamma. This means that as light intensity doubles so do the values recorded by the camera chip. By using a gamma of 1.0 Lightroom can edit raw images in their native gamma without need to convert them to a different gamma such as 1.8 or 2.2. Also using a gamma of 1.0 allows Lightroom to produce smoother blending when performing certain kinds of adjustments."

It also says:

"When processing a non-raw in Develop, the image is converted on the fly to the internal working space and all calculations are done in that space."

Good old Melissa gets a mention there too.
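The difference between a linear (1.0) encoding and a gamma encoding can be sketched numerically. This is an illustration only: it uses a pure power law, whereas real tone curves such as ProPhoto RGB's include a small linear segment near black, and the `encode` and `decode` helpers are names made up for the example.

```python
def encode(linear, gamma):
    """Encode a linear-light value (0..1) with a pure power-law gamma."""
    return linear ** (1.0 / gamma)


def decode(encoded, gamma):
    """Recover the linear-light value from a gamma-encoded value."""
    return encoded ** gamma


# Doubling the light doubles the stored value only when gamma is 1.0.
for gamma in (1.0, 1.8, 2.2):
    low = encode(0.2, gamma)
    high = encode(0.4, gamma)
    print(f"gamma {gamma}: 0.2 -> {low:.4f}, 0.4 -> {high:.4f}, "
          f"ratio {high / low:.4f}")

# Blending black and white 50/50: averaging linear values gives half the
# light, while averaging gamma-2.2 encoded values gives much less.
mid_linear = (0.0 + 1.0) / 2.0
mid_gamma = decode((encode(0.0, 2.2) + encode(1.0, 2.2)) / 2.0, 2.2)
print(f"blend in linear space : {mid_linear:.4f} of full light")
print(f"blend in gamma-2.2    : {mid_gamma:.4f} of full light")
```

The second part hints at why a linear working space can blend more smoothly: arithmetic on linear values corresponds directly to mixing light, while arithmetic on gamma-encoded values does not.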

Last edited by xpatUSA; 30th November 2017 at 10:40 AM.

-

30th November 2017, 04:50 PM #3

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

Reading that Adobe's Melissa was effectively ProPhoto RGB with a Gamma of 1.0 is what originally got me thinking about editing in a wide colour space, if for no other reason than Adobe knew more about photo editing than I did.

As I do print, I did notice a significant difference in the tonal range in some of my prints, so I knew that something was going on. Unfortunately, there is a lot of "noise" on the internet when it comes to colour spaces, gamut, printing, etc. so it took me some time to figure out what was really going on and frankly it extends beyond prints to what we see on the screen too. My findings are effectively what #1 is trying to show.

-

30th November 2017, 06:23 PM #4

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

Manfred,

An excellent thread.

Ted, you wrote:

"Interestingly, it would appear that Lightroom always works in a linear wide-gamut editing space, irrespective of what gets "assigned" or selected as a "working color space"."

AFAIK, Lightroom does not have an option for users to select a working space. Internally, it uses Melissa.

-

30th November 2017, 06:54 PM #5

- Join Date: May 2014
- Location: amsterdam, netherlands
- Posts: 3,182
- Real Name: George

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

Manfred,

Firstly, thanks for the effort you took to explain your thoughts.

I'm not convinced, as you might have thought.

To start the trench battle again, a digital image doesn't have a color. The output device can show colors. The digital values of that digital image are telling the output device what colors to show based on its gamut.

The definition of the colors as in the horse shoe are analogue colors as a result of the combination of the individual R,G,B colors.

Based on the R,G,B colors the A/D conversion is giving a digital value representing the intensity of that specific color.

When converting between 2 color spaces, all pixels are recalculated and stay in the used bit depth range.

Well, that's my summary.

If you write "If our image contains data that cannot be reproduced by our specific output device, that colour is referred to as being “out of gamut”, often abbreviated as OOG." then I think that's not correct. The data, I think you refer to the pixel values, can always be shown. The question is if they represent the real world colors as much as possible. Are the pixel values based on the right color gamut, that of your output device? And that counts for all colors, not just the extremes.

I've tried to figure out why LR and others use a wide color space, even when a small color gamut screen is used. The only reason I can think of is that going from a wide color space to a smaller color space is easier than the other way.

I'll read it over again later. Has been a hard day.

George

-

30th November 2017, 07:57 PM #6

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

George - raw data has no colour, it is just data. Once that data is converted to an image file, it does have colour data as the colour space is assigned during the raw conversion process. Once that colour data has been assigned, the output device, whether it is a computer screen or a print will use that image file to display the data.

If the image file is not capable of reproducing the colour, which is device specific, this is what is referred to as out-of-gamut. The rendering intent remaps the out of gamut data into something that the device can reproduce. We can also use our editing tool to remap the colour values in the image's colour space over to a new colour space.

That's all that happens.

-

30th November 2017, 08:24 PM #7

- Join Date: May 2014
- Location: amsterdam, netherlands
- Posts: 3,182
- Real Name: George

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

I don't mean raw specifically, though it contains a ColorFilterArray. Somehow there must be a kind of known "gamut" for those R, G and B filters so they can be translated to the output gamut, after the creation of the RGB compound colors.

Isn't out of gamut not a more printer specific phrase?

George

-

30th November 2017, 09:16 PM #8

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

"Isn't out of gamut not a more printer specific phrase?"

No, it refers to any rendering device. For example, some of the colors in the Adobe RGB gamut and many of the colors in the ProPhoto gamut cannot be displayed on any of my computer monitors.

Moreover, it applies to the working space of the software. For example, if you set the working space to sRGB, colors that are outside that gamut are mapped to the sRGB gamut, and the additional information is lost.

Last edited by DanK; 30th November 2017 at 09:22 PM.

-

30th November 2017, 09:36 PM #9

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

George the characteristics of the CFA filters can be measured in terms of their spectral response (ie response vs wavelength) and this information can be used to estimate the range of colors a camera can capture. But this is not what Manfred's thread is about. It is about processing and display of the captured data.

As Dan mentioned, the term gamut refers to the range of colors an output device such as a printer or monitor can render. This range is determined by the actual colors of the primaries - the red green and blue lcd pixels in a monitor and the inks in a printer (as viewed on the paper).
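For a display, the idea that the primaries set the gamut can be sketched as a simple geometric test: on the CIE xy chromaticity diagram the three primaries form a triangle, and a chromaticity can only be reproduced if it falls inside that triangle. The sRGB primaries and the D65 white point below are the commonly published values; the function names are made up for the example. This only considers chromaticity, so it ignores the brightness dimension of a real gamut, and it does not apply to printers, whose gamuts are not simple triangles.

```python
# sRGB red, green and blue primaries as CIE xy chromaticities.
SRGB_TRIANGLE = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]


def cross(origin, a, b):
    """2-D cross product of the vectors origin->a and origin->b."""
    return ((a[0] - origin[0]) * (b[1] - origin[1])
            - (a[1] - origin[1]) * (b[0] - origin[0]))


def inside_triangle(point, triangle):
    """True if point lies inside (or on the edge of) the triangle."""
    a, b, c = triangle
    d1 = cross(a, b, point)
    d2 = cross(b, c, point)
    d3 = cross(c, a, point)
    has_negative = d1 < 0 or d2 < 0 or d3 < 0
    has_positive = d1 > 0 or d2 > 0 or d3 > 0
    # Inside means the point is on the same side of all three edges.
    return not (has_negative and has_positive)


d65_white = (0.3127, 0.3290)      # white point shared by sRGB and AdobeRGB
adobe_green = (0.21, 0.71)        # AdobeRGB green primary

print("D65 white in sRGB gamut      :", inside_triangle(d65_white, SRGB_TRIANGLE))
print("AdobeRGB green in sRGB gamut :", inside_triangle(adobe_green, SRGB_TRIANGLE))
```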

Dave

Last edited by dje; 30th November 2017 at 09:44 PM.

-

30th November 2017, 10:19 PM #10

- Join Date: May 2014
- Location: amsterdam, netherlands
- Posts: 3,182
- Real Name: George

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

That's what I have a problem with. All the pixel values are recalculated so that in that sRGB gamut the new colors are as natural as possible. Not only those colors that are out of the sRGB gamut looking at the horse shoe.

I was thinking of the printer as being a device which would print an image just as it is on the screen. Something like that.

George

-

30th November 2017, 11:58 PM #11

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

It depends on the Rendering Intent used with the conversion. Eg with Relative Colorimetric, as Manfred explained in post #1, in-gamut colors remain unchanged and the out-of-gamut colors get remapped to the edges of the new gamut boundary. With Perceptual Intent, all colors get re-mapped to fit the new color space. The latter is apparently useful when there are a lot of OOG colors in an image.
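The contrast between the two intents can be shown with a deliberately crude toy model. Each colour is reduced to a single "saturation" number and the destination gamut to a single limit; Relative Colorimetric is modelled as a clamp and Perceptual as a uniform scale. Real Perceptual rendering is defined by tables inside each ICC profile, is usually non-linear and differs between vendors, so the function names and the scaling rule here are assumptions made purely for illustration.

```python
def relative_colorimetric(saturations, limit):
    """Toy model: leave in-gamut values alone, clamp the rest to the limit."""
    return [min(s, limit) for s in saturations]


def perceptual(saturations, limit):
    """Toy model: scale every value so the most saturated one just fits."""
    peak = max(saturations)
    if peak <= limit:
        return list(saturations)  # nothing is out of gamut, nothing to do
    scale = limit / peak
    return [s * scale for s in saturations]


source = [0.2, 0.6, 1.2, 1.5]   # the last two are outside the gamut
limit = 1.0                     # edge of the destination gamut

clipped = relative_colorimetric(source, limit)
compressed = perceptual(source, limit)

print("source               :", source)
print("relative colorimetric:", [round(s, 3) for s in clipped])
print("perceptual (toy)     :", [round(s, 3) for s in compressed])
```

With the clamp, the two in-gamut values are untouched but the two out-of-gamut values end up identical, so the difference between them is gone. With the scale, every value changes, but the two originally out-of-gamut colours stay distinct from each other.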

Dave

-

1st December 2017, 12:11 AM #12

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

Manfred, thank you for a very clear and carefully written post.

-

1st December 2017, 02:50 AM #13

- Join Date: Feb 2012
- Location: Texas
- Posts: 6,956
- Real Name: Ted

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

Thanks, Dan . . so it uses Melissa for everything, then, not like it says in my earlier link?:

http://www.colourspace.xyz/the-truth...ur-management/

However Andrew Rodney, a well-known color guru and author wrote:

"Correct, there is no way to change ACR or Lightroom's processing color space which is ProPhoto RGB but with a different tone curve (1.0). Note this isn't Melissa RGB. That is the name for the color space only used to generate the RGB values (outside the soft proof) and Histogram. It is ProPhoto RGB gamut but with a 2.2 TRC. The internal RGB processing color space has no name."

I'm thinking of answering my own supposition based on the link and the above as follows:

LR doesn't use Melissa RGB for everything and Melissa RGB's gamma is not 1.0, it's 2.2. I've also read long ago that its white reference is D65, same as sRGB . . .

Last edited by xpatUSA; 1st December 2017 at 03:18 AM.

-

1st December 2017, 03:06 AM #14

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

If you accept the fact that it is a digital image (which you can't see so it is not an image) you also have to accept the fact that it will represent either a monochrome or a colour image. You are merely playing with language usage and this observation is completely irrelevant to the discussion. It's about as useful to the discussion as stating that colour spaces used in computing don't have colours.

It would be more productive if you limited your discussion to aspects concerning photography rather than unnecessary semantics.

-

1st December 2017, 06:20 AM #15

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

That has nothing to do with what I have written about. You, I think, are asking questions regarding how the data from the sensor is turned into an image data. That is a step that occurs ahead of what I have written about and is irrelevant to that.

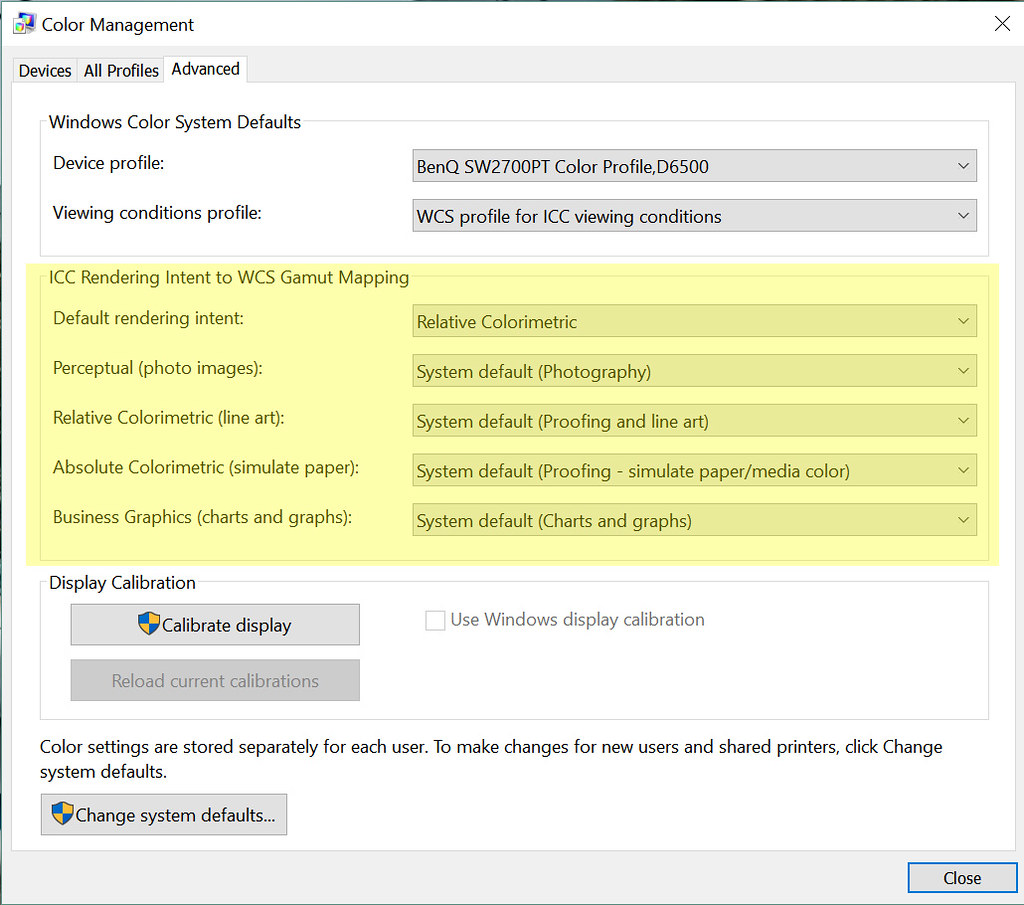

No, it is specific to an output device, including your computer screen. This is a screenshot of the Windows 10 colour setting screen. Notice all the references to Rendering Intents for different types of image data?

-

1st December 2017, 06:41 AM #16

- Join Date

- May 2014

- Location

- amsterdam, netherlands

- Posts

- 3,182

- Real Name

- George

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

-

1st December 2017, 06:48 AM #17

- Join Date: May 2014
- Location: amsterdam, netherlands
- Posts: 3,182
- Real Name: George

-

1st December 2017, 06:56 AM #18

- Join Date: May 2014
- Location: amsterdam, netherlands
- Posts: 3,182
- Real Name: George

-

1st December 2017, 07:11 AM #19

- Join Date: Feb 2012
- Location: Texas
- Posts: 6,956
- Real Name: Ted

-

1st December 2017, 07:49 AM #20

Re: Why one should use a wide colour space when editing images on a narrow gamut scre

Again George, the specifics of how these two rendering intents handle OOG colours are irrelevant to the topic discussed here. The important part is that both have specific roles in image editing and the choice is up to the person doing the editing and the final result he / she is after.

The important part is that these two rendering intents both eliminate OOG colours. The issues associated with wide versus narrow colour spaces are the same in both cases.
