CAMERAS vs. THE HUMAN EYE

Why can't I just point my camera at what I'm seeing and record that? It's a seemingly simple question. It's also one of the most complicated to answer, and requires delving into not only how a camera records light, but also how and why our eyes work the way they do. Tackling such questions can reveal surprising insights about our everyday perception of the world — in addition to making one a better photographer.


INTRODUCTION

Our eyes are able to look around a scene and dynamically adjust based on subject matter, whereas cameras capture a single still image. This trait accounts for many of our commonly understood advantages over cameras. For example, our eyes can compensate as we focus on regions of varying brightness, can look around to encompass a broader angle of view, or can alternately focus on objects at a variety of distances.

However, the end result is akin to a video camera — not a stills camera — that compiles relevant snapshots to form a mental image. A quick glance by our eyes might be a fairer comparison, but ultimately the uniqueness of our visual system is unavoidable because:

What we really see is our mind's reconstruction of objects based on input provided by the eyes — not the actual light received by our eyes.

Skeptical? Most are, at least initially. The examples below show situations where the mind can be tricked into seeing something different from what the eyes actually receive:


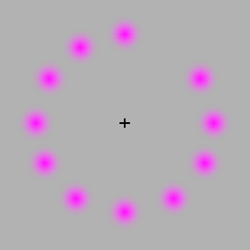

False Color: Move your mouse onto the corner of the image and stare at the central cross. The missing dot will rotate around the circle, but after a while this dot will appear to be green — even though no green is actually present in the image.

Mach Bands: Move your mouse on and off of the image. Each of the bands will appear slightly darker or lighter near its upper and lower edges — even though each is uniformly gray.

However, this shouldn't discourage us from comparing our eyes and cameras! Under many conditions a fair comparison is still possible, but only if we take into consideration both what we're seeing and how our mind processes this information. Subsequent sections will try to distinguish the two whenever possible.

OVERVIEW OF DIFFERENCES

This tutorial groups comparisons into the following visual categories:

1. Angle of View
2. Resolution & Detail
3. Sensitivity & Dynamic Range

The above are often understood to be where our eyes and cameras differ the most, and are usually also where there is the most disagreement. Other topics might include depth of field, stereo vision, white balancing and color gamut, but these won't be the focus of this tutorial.

1. ANGLE OF VIEW

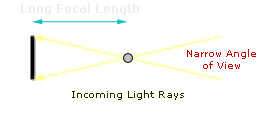

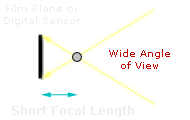

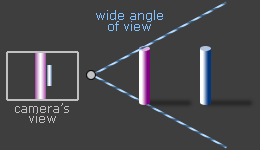

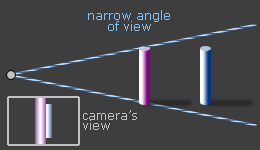

With cameras, the angle of view is determined by the focal length of the lens (along with the sensor size of the camera). For example, a telephoto lens has a longer focal length than a standard portrait lens, and thus encompasses a narrower angle of view.

Unfortunately, our eyes aren't as straightforward. Although the human eye has a focal length of approximately 22 mm, this figure is misleading because (i) the back of each eye is curved, (ii) the periphery of our visual field contains progressively less detail than the center, and (iii) the scene we perceive is the combined result of both eyes.

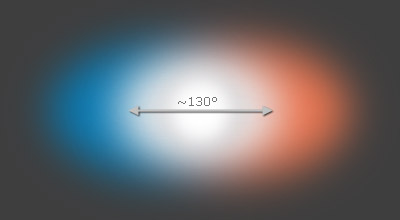

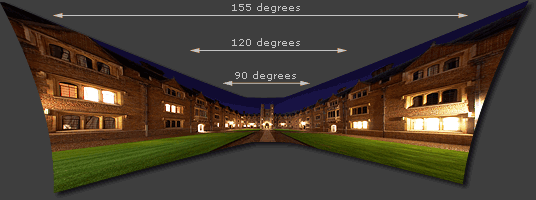

Each eye individually has anywhere from a 120-200° angle of view, depending on how strictly one defines objects as being "seen." Similarly, the dual eye overlap region is around 130° — or nearly as wide as a fisheye lens. However, for evolutionary reasons our extreme peripheral vision is only useful for sensing motion and large-scale objects (such as a lion pouncing from your side). Furthermore, such a wide angle would appear highly distorted and unnatural if it were captured by a camera.

Left Eye | Dual Eye Overlap | Right Eye

Our central angle of view — around 40-60° — is what most impacts our perception. Subjectively, this would correspond with the angle over which you could recall objects without moving your eyes. Incidentally, this is close to a 50 mm "normal" focal length lens on a full frame camera (43 mm to be precise), or a 27 mm focal length on a camera with a 1.6X crop factor. Although this doesn't reproduce the full angle of view at which we see, it does correspond well with what we perceive as having the best trade-off between different types of distortion:

Wide Angle Lens (objects are very different sizes)

Telephoto Lens (objects are similar in size)

Too wide an angle of view and the relative sizes of objects are exaggerated, whereas too narrow an angle of view means that objects all appear nearly the same relative size and you lose the sense of depth. Extremely wide angles also tend to make objects near the edges of the frame appear stretched (if captured by a standard/rectilinear camera lens).

By comparison, even though our eyes capture a distorted wide angle image, we reconstruct this to form a 3D mental image that is seemingly distortion-free.
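The focal lengths quoted in this section can be checked with a little trigonometry. The sketch below is only an illustration: it assumes a simple rectilinear lens focused at infinity, a 36 x 24 mm full frame sensor, and an angle measured along the sensor diagonal. The function names are made up for this example.

```python
import math

# Assumed full frame sensor dimensions (36 x 24 mm); the diagonal is about 43.3 mm.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)


def angle_of_view_deg(focal_length_mm, sensor_dimension_mm=FULL_FRAME_DIAGONAL_MM):
    """Angle of view in degrees for an idealized rectilinear lens at infinity focus."""
    return math.degrees(2 * math.atan(sensor_dimension_mm / (2 * focal_length_mm)))


def cropped_sensor_focal_length_mm(full_frame_focal_mm, crop_factor):
    """Focal length that gives the same angle of view on a smaller (cropped) sensor."""
    return full_frame_focal_mm / crop_factor


if __name__ == "__main__":
    for focal in (24, 43, 50, 200):
        print(f"{focal:>3} mm on full frame: {angle_of_view_deg(focal):5.1f} degrees (diagonal)")
    print(f"43 mm with a 1.6X crop factor: {cropped_sensor_focal_length_mm(43, 1.6):.1f} mm")
```

Under these assumptions, both the 43 mm and 50 mm lenses land inside the 40-60° central range described above, and dividing 43 mm by a 1.6X crop factor gives roughly the 27 mm figure quoted in the text.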

2. RESOLUTION & DETAIL

Most current digital cameras have 5-20 megapixels, which is often cited as falling far short of our own visual system. This is based on the fact that at 20/20 vision, the human eye is able to resolve the equivalent of a 52 megapixel camera (assuming a 60° angle of view).
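One plausible way to arrive at a figure of this size is sketched below. The tutorial doesn't state its derivation, so the inputs are assumptions: 20/20 vision is taken to resolve detail about one arcminute wide, each arcminute is sampled with two pixels (0.5 arcminute per pixel), and the field of view is treated as a 60° square.

```python
def eye_equivalent_megapixels(angle_of_view_deg, arcmin_per_pixel=0.5):
    """Megapixels needed to sample a square field of view at a given angular pixel size.

    arcmin_per_pixel=0.5 is an assumed value: two pixels per arcminute of detail.
    """
    pixels_across = angle_of_view_deg * 60 / arcmin_per_pixel  # 60 arcminutes per degree
    return pixels_across ** 2 / 1e6


if __name__ == "__main__":
    print(f"{eye_equivalent_megapixels(60):.1f} megapixels for a 60 degree field")
```

With these inputs the field is 7200 pixels across, or about 52 megapixels. Changing either assumption shifts the answer considerably, which is one reason such figures vary so widely between sources.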

However, such calculations are misleading. Only our central vision is 20/20, so we never actually resolve that much detail in a single glance. Away from the center, our visual ability decreases dramatically, such that by just 20° off-center our eyes resolve only one-tenth as much detail. At the periphery, we only detect large-scale contrast and minimal color:

Qualitative representation of visual detail using a single glance of the eyes.

Taking the above into account, a single glance by our eyes is therefore only capable of perceiving detail comparable to a 5-15 megapixel camera (depending on one's eyesight). However, our mind doesn't actually remember images pixel by pixel; it instead records memorable textures, color and contrast on an image by image basis.

In order to assemble a detailed mental image, our eyes therefore focus on several regions of interest in rapid succession. This effectively paints our perception.

The end result is a mental image whose detail has effectively been prioritized based on interest. This has an important but often overlooked implication for photographers: even if a photograph approaches the technical limits of camera detail, such detail ultimately won't count for much if the imagery itself isn't memorable.

Other important differences with how our eyes resolve detail include:

Asymmetry. Each eye is more capable of perceiving detail below our line of sight than above, and its peripheral vision is also much more sensitive in directions away from the nose than towards it. Cameras record images almost perfectly symmetrically.

Low-Light Viewing. In extremely low light, such as under moonlight or starlight, our eyes actually begin to see in monochrome. Under such situations, our central vision also begins to depict less detail than just off-center. Many astrophotographers are aware of this, and use it to their advantage by staring just to the side of a dim star if they want to be able to see it with their unassisted eyes.

Subtle Gradations. Too much attention is often given to the finest detail resolvable, but subtle tonal gradations are also important — and happen to be where our eyes and cameras differ the most. With a camera, enlarged detail is always easier to resolve — but counter-intuitively, enlarged detail might actually become less visible to our eyes. In the example below, both images contain texture with the same amount of contrast, but this isn't visible in the image to the right because the texture has been enlarged.

Fine Texture (barely visible)

Enlarged 16X

Coarse Texture (no longer visible)

3. SENSITIVITY & DYNAMIC RANGE

Dynamic range* is one area where the eye is often seen as having a huge advantage. If we were to consider situations where our pupil opens and closes for different brightness regions, then yes, our eyes far surpass the capabilities of a single camera image (and can have a range exceeding 24 f-stops). However, in such situations our eye is dynamically adjusting like a video camera, so this arguably isn't a fair comparison.

Eye Focuses on Background | Eye Focuses on Foreground | Our Mental Image

If we were to instead consider our eye's instantaneous dynamic range (where our pupil opening is unchanged), then cameras fare much better. This would be similar to looking at one region within a scene, letting our eyes adjust, and not looking anywhere else. In that case, most estimate that our eyes can see anywhere from 10-14 f-stops of dynamic range, which definitely surpasses most compact cameras (5-7 stops), but is surprisingly similar to that of digital SLR cameras (8-11 stops).

On the other hand, our eye's dynamic range also depends on brightness and subject contrast, so the above only applies to typical daylight conditions. With low-light star viewing our eyes can approach an even higher instantaneous dynamic range, for example.

*Quantifying Dynamic Range. The most commonly used unit for measuring dynamic range in photography is the f-stop, so we'll stick with that here. This describes the ratio between the lightest and darkest recordable regions of a scene, in powers of two. A scene with a dynamic range of 3 f-stops therefore has a white that is 8X as bright as its black (since 2³ = 2×2×2 = 8).
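To get a feel for how quickly these powers of two grow, the short sketch below converts the f-stop ranges quoted in this section into brightness ratios. The function names are made up for this example, and the labels simply repeat the ranges given in the text.

```python
import math


def stops_to_contrast_ratio(stops):
    """Brightness ratio between lightest and darkest regions for a range in f-stops."""
    return 2 ** stops


def contrast_ratio_to_stops(ratio):
    """Inverse conversion: f-stops spanned by a given brightness ratio."""
    return math.log2(ratio)


if __name__ == "__main__":
    ranges = {
        "compact camera": (5, 7),
        "digital SLR": (8, 11),
        "eye, instantaneous": (10, 14),
        "eye, with pupil adjusting": (24, 24),
    }
    for label, (low, high) in ranges.items():
        print(f"{label:>26}: {stops_to_contrast_ratio(low):,}:1 to "
              f"{stops_to_contrast_ratio(high):,}:1")
```

Each additional stop doubles the ratio, so the 24 stops mentioned for an adjusting eye correspond to a ratio of more than 16 million to 1.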

Photos on left (matches) and right (night sky) by lazlo and dcysurfer, respectively.

Sensitivity. This is another important visual characteristic, and describes the ability to resolve very faint or fast-moving subjects. In bright light, modern cameras are better at resolving fast-moving subjects, as exemplified by unusual-looking high-speed photography. This is often made possible by camera ISO speeds exceeding 3200; by comparison, the equivalent daylight ISO for the human eye is thought to be as low as 1.

However, under low-light conditions, our eyes become much more sensitive (presuming that we let them adjust for 30+ minutes). Astrophotographers often estimate this as being near ISO 500-1000; still not as high as digital cameras, but close. On the other hand, cameras have the advantage of being able to take longer exposures to bring out even fainter objects, whereas our eyes don't see additional detail after staring at something for more than about 10-15 seconds.
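The ISO figures above can also be expressed in stops, which makes the gaps easier to compare. This is a rough sketch that assumes the usual photographic convention that each doubling of ISO equals one stop of sensitivity; the function name is made up for this example.

```python
import math


def iso_difference_in_stops(iso_low, iso_high):
    """Stops of sensitivity separating two ISO values (one stop per doubling)."""
    return math.log2(iso_high / iso_low)


if __name__ == "__main__":
    print(f"Daylight eye (ISO 1) vs. camera (ISO 3200): "
          f"{iso_difference_in_stops(1, 3200):.1f} stops")
    for eye_iso in (500, 1000):
        print(f"Dark-adapted eye (ISO {eye_iso}) vs. camera (ISO 3200): "
              f"{iso_difference_in_stops(eye_iso, 3200):.1f} stops")
```

By this measure the daylight gap is between 11 and 12 stops, while a dark-adapted eye comes within roughly 2 to 3 stops of ISO 3200.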

CONCLUSIONS & FURTHER READING

One might contend that whether a camera is able to beat the human eye is inconsequential, because cameras require a different standard: they need to make realistic-looking prints. A printed photograph doesn't know which regions the eye will focus on, so every portion of a scene would need to contain maximal detail — just in case that's where we'll focus. This is especially true for large or closely viewed prints. However, one could also contend that it's still useful to put a camera's capabilities in context.

Overall, most of the advantages of our visual system stem from the fact that our mind is able to intelligently interpret the information from our eyes, whereas with a camera, all we have is the raw image. Even so, current digital cameras fare surprisingly well, and surpass our own eyes for several visual capabilities. The real winner is the photographer who is able to intelligently assemble multiple camera images — thereby surpassing even our own mental image.

Please see the following for further reading on this topic:

- High Dynamic Range. How to extend the dynamic range of digital cameras using multiple exposures. Results can even exceed the human eye.

- Graduated Neutral Density (GND) Filters. A technique for enhancing the appearance of high contrast scenes similar to how we form our mental image.

- Photo Stitching Digital Panoramas. A general discussion of using multiple photos to enhance the angle of view.